In today’s world, we’re hearing more and more about the power of Artificial Intelligence (AI). But with new technology comes new questions, especially about security and privacy. The Einstein Trust layer, as part of Agentforce, is designed to address these concerns.

What is the Einstein Trust Layer

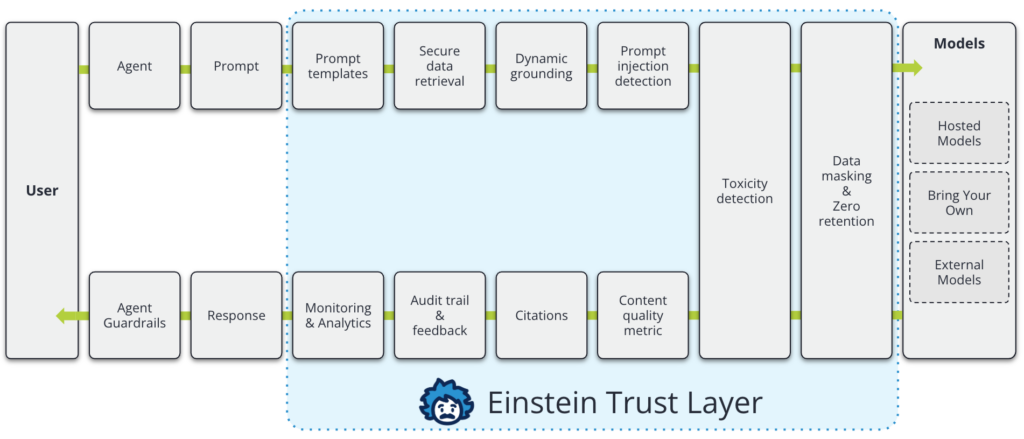

The Einstein Trust Layer acts as a kind of guardian, carefully managing the flow of information when you use Agentforce within Salesforce.

Think of it like a secure journey for your data broken down into four key steps:

1) Instructions

The user supplies instructions to your Agent, which must be transformed into a prompt for the AI model. To enhance the likelihood of success for the AI model, this information is grounded in data from your systems to provide additional context. This ensures that the AI possesses the appropriate context. It is then checked for possible harmful content, such as language or code that attempts to make the Agent bypass security. This is important, as it will impact the output of the response.

2) Zero Data Retention

To ensure that your data is safe, any sensitive data, such as personal information, is masked before it is sent to the Large Language Model (LLM). This guarantees that the actual data is concealed and replaced with placeholder text, thereby preventing the AI model from accessing your private information. The Einstein Trust Layer also explicitly instructs the LLM to delete both the prompt and its response to ensure that no data is retained while obtaining the most accurate response.

3) Quality Checks

Once the LLM has generated its response, it is processed through the return path of the Trust Layer, where placeholders are restored to their original state, and the response undergoes another check for potentially harmful content during the toxicity detection stage. This ensures that the output is both appropriate and non-harmful. Furthermore, the output is assessed against your guardrails to ensure that no rules you have established have been violated.

4) Response

Finally, the response is presented to the user. If, at any point during the process, the response is flagged as incorrect or in violation of any rules, Agentforce will not display the response to the user. Instead, it will provide a holding response or request further information to ensure it can obtain the correct answer.

The Einstein Trust layer in action

This short video outlines in more detail some of the key elements of the Einstein Trust layer.

In Summary

Many companies are hesitant to use AI because they don’t fully trust how their data is being used, and that’s understandable. The Einstein Trust Layer aims to change that by adding multiple layers of protection so you can make the most of AI without worrying about compromising your sensitive data.

Interested in learning more about how Agentforce can support your business while safeguarding your data with the Einstein Trust Layer? Visit our Agentforce Hub to explore further content and arrange a call with one of our Agentforce experts.