Dependency injection using Custom Metadata Types is a great way to write code on Salesforce that is easily extensible. Even if you never actually end up extending your design (a customer who doesn’t change or extend the requirements? Hmmm… maybe never?), it helps to separate concerns and forces the dependencies to be broken up in a way that fits nicely with a DX view of your code as a collection of packages.

Andrew Fawcett has recently been working on a generalised library for dependency injection in Salesforce, Force DI, which he has blogged about. We’ve been using a similar technique at Nebula for some time now, in all kinds of situations. In the course of writing a new application with dependency injection, I used a technique to create object instances with parameters in a nice, generic way. I can’t even tell if I picked up this idea from a StackExchange post, or came up with it myself. Either way, it’s worth sharing…

The Application: Scheduled Job Manager

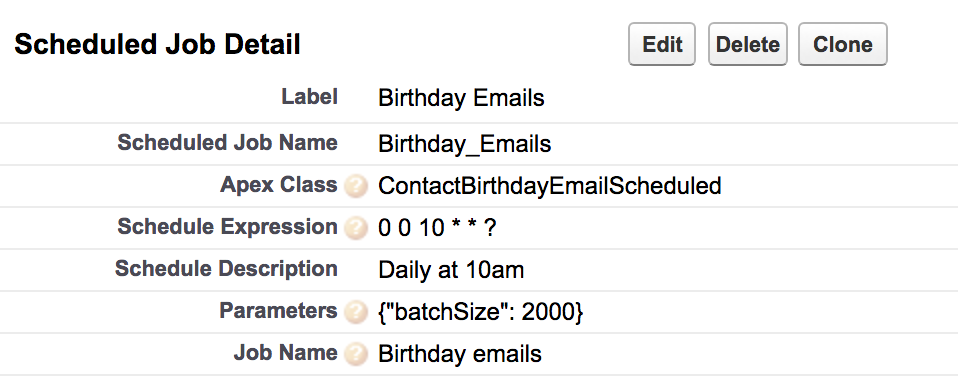

The application is a generic Scheduled Job Manager. I dislike the current situation for scheduled jobs because, once you’ve started them, you lose any parameters that were used to start them. For example, a common scenario is to have a scheduled job start a batch, and include a parameter to say what the batch size should be. If you start the scheduled job from the developer console, the batch size is not recorded anywhere that you can see it (unless you code something up yourself for this purpose). The Scheduled Job Manager is driven by custom metadata records which tell it how to start the job: which Schedulable class to run, what cron string to use, what parameters to pass to the class when it is created, and so on. It solves the problem of recording scheduled job parameters once and for all.

Suppose we have a scheduled job to send out birthday emails to our Contacts. The scheduled class may look like this:

    public class ContactBirthdayEmailScheduled implements Schedulable {

        private Integer batchSize;

        public ContactBirthdayEmailScheduled() {}

        public ContactBirthdayEmailScheduled(Integer batchSize) {
            this.batchSize = batchSize;
        }

        public void execute(SchedulableContext sc) {
            Database.executeBatch(new ContactBirthdayEmailBatch(), batchSize);
        }
    }

Without the Scheduled Job Manager, you would start this job in production by running it in the developer console e.g.

    System.schedule('Send birthday emails', '0 0 10 * * ?', new ContactBirthdayEmailScheduled(2000));

Then, six months later, it starts failing because someone has added a load of Process Builders to Contact and the batch size needs to be reduced. At which point you have to ask yourself, “What batch size did I type six months ago? How much smaller do I need to make it? Do I feel lucky?”. Most likely, you don’t remember the previous batch size, so you take a guess and see if it works. With the Scheduled Job Manager, we do know the batch size because it’s recorded in the custom metadata.

Applying Parameters

To implement the Scheduled Job Manager, we know that we can construct an instance of the Schedulable class in the following way:

    Type scheduledClassType = Type.forName(thisJob.Apex_Class__c);
    Schedulable scheduledInstance = (Schedulable) scheduledClassType.newInstance();

But how can we pass parameters into the Scheduled class in a generic way? One option, used by the Force DI package mentioned above, is to externalise the problem with another dependency injection. In Force DI, a Provider class name can be provided instead of the class you actually want to construct. That Provider has a newInstance(Object params) method which knows what parameters it is expecting, and knows enough about the type you want to construct to be able to set the parameters correctly. (I guess the Provider could be the class itself)
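To make the Provider idea concrete, here is a minimal sketch. The interface and class names (`ProviderSketch`, `InstanceProvider`, `BirthdayEmailProvider`) are made up for illustration and are not copied from Force DI, so check the library itself for its actual API. The sketch also assumes the caller passes the batch size as an `Integer`.

```apex
// Hypothetical outer class, used only so the sketch deploys as one file
public class ProviderSketch {

    // Hypothetical interface modelled on the Provider idea described above
    public interface InstanceProvider {
        Object newInstance(Object params);
    }

    // Knows which parameters ContactBirthdayEmailScheduled expects
    public class BirthdayEmailProvider implements InstanceProvider {
        public Object newInstance(Object params) {
            // Assumption: params is the batch size, passed as an Integer
            return new ContactBirthdayEmailScheduled((Integer) params);
        }
    }

    // Builds the provider by name, then asks it to build the real instance
    public static Schedulable build(String providerClassName, Object params) {
        Type providerType = Type.forName(providerClassName);
        InstanceProvider provider = (InstanceProvider) providerType.newInstance();
        return (Schedulable) provider.newInstance(params);
    }
}
```

With this in place, `ProviderSketch.build('ProviderSketch.BirthdayEmailProvider', 200)` would return a scheduled instance with its batch size set. The cost is one extra class for each type you want to construct.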

The Provider method of Force DI is neat. But there is an alternative. You can use JSON.deserialize() to fill in the values directly instead:

    Type scheduledClassType = Type.forName(thisJob.Apex_Class__c);
    Schedulable scheduledInstance = (Schedulable) JSON.deserialize(thisJob.Parameters__c, scheduledClassType);
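As a worked example of the call above, the following sketch uses literal values in place of the custom metadata fields. The JSON string is an assumption about what Parameters__c might hold for the birthday email job; the only requirement is that its keys match the attribute names of the class.

```apex
// Stand-ins for thisJob.Apex_Class__c and thisJob.Parameters__c (assumed values)
String apexClassName = 'ContactBirthdayEmailScheduled';
String parametersJson = '{"batchSize": 200}';

// Resolve the class by name and let the JSON parser populate its attributes
Type scheduledClassType = Type.forName(apexClassName);
Schedulable scheduledInstance =
    (Schedulable) JSON.deserialize(parametersJson, scheduledClassType);

// Schedule it with the same job name and cron string as the earlier example
System.schedule('Send birthday emails', '0 0 10 * * ?', scheduledInstance);
```

Changing the batch size then becomes a matter of editing the JSON in the metadata record and rescheduling the job, with no code change.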

JSON deserialisation allows you to do some things that are not possible in any other way in Apex. It can bypass the access level of attributes to set them at object creation (i.e. it can write to private attributes). It can also write to attributes in a generic way. By contrast, if I have a Map<String, Object> of attribute names mapping to values, and I want to set them on an instance of my class, Apex doesn’t provide the reflection capability to loop through them and set all the attributes. You would need one line of code per attribute. JSON deserialisation solves this for us.
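The Map<String, Object> case can be handled with the same trick by serialising the map first. This is a minimal sketch; `JsonInstanceFactory` and its exception class are hypothetical names, and it assumes the map keys match the attribute names of the target class.

```apex
// Hypothetical helper: builds an instance of a named class from a map of attributes
public class JsonInstanceFactory {

    public class FactoryException extends Exception {}

    public static Object create(String className, Map<String, Object> attributes) {
        Type targetType = Type.forName(className);
        if (targetType == null) {
            throw new FactoryException('No such class: ' + className);
        }
        // Round trip through JSON: the map becomes a JSON object, and the
        // parser sets each matching attribute on the new instance
        return JSON.deserialize(JSON.serialize(attributes), targetType);
    }
}
```

For example, `JsonInstanceFactory.create('ContactBirthdayEmailScheduled', new Map<String, Object>{ 'batchSize' => 200 })` would return an instance with batchSize set, without any code that mentions batchSize by name.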

There are some disadvantages – you bypass the constructor, so any processing that it might do while setting the attributes is also bypassed. It only works for simple types. But, where it works, it is so simple.
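One way to live with the bypassed constructor is to resolve defaults at the point of use, so the class behaves sensibly however it was created. The class below is a hypothetical variant of the scheduled class, not part of the Scheduled Job Manager, and the fallback of 200 is chosen because that is the platform default scope size for Database.executeBatch.

```apex
// Hypothetical variant that does not rely on a constructor having run
public class DefaultingBirthdayEmailScheduled implements Schedulable {

    // Fallback used when the JSON parameters omit batchSize
    private static final Integer DEFAULT_BATCH_SIZE = 200;

    private Integer batchSize;

    // Resolves the value when it is needed, instead of in a constructor
    public Integer getEffectiveBatchSize() {
        return batchSize == null ? DEFAULT_BATCH_SIZE : batchSize;
    }

    public void execute(SchedulableContext sc) {
        Database.executeBatch(new ContactBirthdayEmailBatch(), getEffectiveBatchSize());
    }
}
```

Any validation a constructor would have done can be moved into a method like this in the same way.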

Two final thoughts:

The Provider approach is not incompatible with this. You could write a Force DI Provider using the JSON method which could be used as the default provider for most cases, then write more complicated Providers where they are really necessary.

Please have a look at this Idea I posted to Allow Custom Metadata Types to have a metadata relationship with Apex Classes. It would make all this a little bit tidier.